- Why is there a write speed difference between dd, cp, rsync and macOS Finder to a SMB3 drive?

- Copying large files using Rsync on Mac between a NAS and a local hard drive.

I am running a regular backup using Rsync for one of my data servers. I am backing up the data which is on my Synology NAS Storage to an External Hard drive over the network and using a gigabit connection. I have got around 4TB worth of data to back up every day and I am using the following rsync command:

But with this command the backup takes very long. Can someone please suggest a quicker way of doing it through Rsync?

JakeGould

1 Answer

You state this:

I am running a regular backup using Rsync for one of my data servers. I am backing up the data which is on my Synology NAS Storage to an External Hard drive over the network and using a gigabit connection.

The problem here is not Rsync or network infrastructure but rather the framework of the procedure you are dealing with.

When you run Rsync, or do any file system operations, on a local disk, the OS has direct access to the file system information it needs to do whatever it has to do.

But if you are running your command from your Mac OS X machine, which uses a network connection to then copy to an external hard drive, this is what happens:

- When you run the command on your Mac OS X machine, what happens is the OS actually has to reach out over the network to the network volume before it makes any copies to simply get basic file system info since none of that info is local to your OS’s file system.

- Only after that file system info is finally received by whatever process requested it are those file system actions actually executed to act on the data.

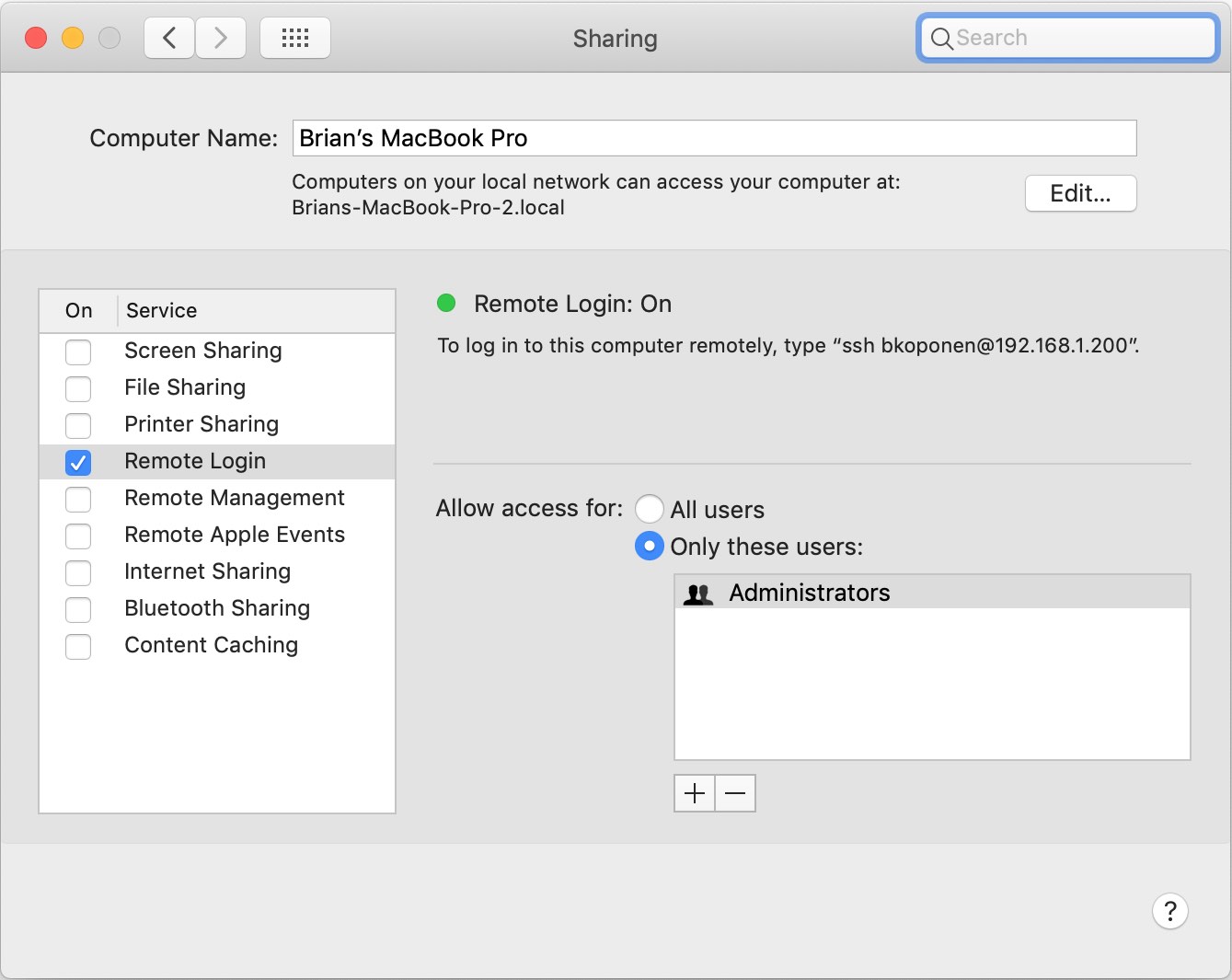

So using a setup like this will seemingly take forever. The better option is to somehow log in to your Synology NAS, have it connect to that external hard drive as a remote share, and then run the Rsync command, or some equivalent, directly from the Synology NAS itself. A rough scenario could be something like this:

- You create a network share on your Mac OS X machine that is mapped to the external hard drive.

- You set up login credentials on the Synology NAS via the DSM software interface.

- Once you have the Synology NAS set up to mount the network share on that Mac OS X machine that is mapped to the external hard drive, then on that Synology NAS run some Rsync command.

I have worked with Synology’s DSM software in the past and I know there is some built-in backup software on the system that is either explicitly Rsync or something that works like Rsync. But in general, you need the Synology NAS itself to manage the process if you want to get real speed out of this setup.
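For a sense of scale, the arithmetic behind a daily 4TB run can be sketched in shell. This is only a rough estimate: est_hours is a hypothetical helper, the 110 MB/s and 60 MB/s rates are assumed sustained throughputs rather than measurements from this setup, and 1 TB is taken as 1,000,000 MB.

```shell
# est_hours TB MBPS: hours needed to move TB terabytes at MBPS megabytes/second.
# Hypothetical helper; assumes 1 TB = 1,000,000 MB and a constant rate.
est_hours() {
    awk -v tb="$1" -v mbps="$2" 'BEGIN { printf "%.1f\n", tb * 1000000 / mbps / 3600 }'
}

est_hours 4 110   # assumed rate close to what gigabit Ethernet can sustain
est_hours 4 60    # assumed slower rate
```

Under these assumptions a full 4TB pass takes roughly 10 hours at 110 MB/s and 18.5 hours at 60 MB/s, before any per-file network round trips are added on top.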

JakeGould

Tl;dr – We can't find the reason for the limited write speed of 60 MB/sec to our NAS via SMB and AFP from two different Mac clients. In comparison: An old Windows 7 laptop in the same network writes a steady 100 MB/sec.

If you read this question for the first time, please skip to the Update 4 section. rsync is the main reason for the low speed, even though we don't understand why (for a single file!).

Original Question: Find speed bottleneck SMB3/NAS with Mac OS 10.11.5 and above

We tested via rsync --progress -a /localpath/test.file /nas/test.file on macOS and the copy info of Windows.

The NAS is a DS713+ running their current DSM 6.0.2 (tested with 5.x too), with two HGST Deskstar NAS SATA 4TB (HDN724040ALE640) in RAID1 with only gigabit ethernet components and new ethernet cables (at least Cat5e).

Mac clients first only made 20 MB/sec. But applying the signing_required=no fix (described here) pushed the write speed to 60 MB/sec via SMB2 and SMB3. AFP also delivers around 60 MB/sec. Result varies around 5 MB/sec depending on protocol and (Mac) client.

What we've already tried:

Network

- Tested network performance via iperf3. Result: 926 Mbit/s. Looks good.

- Tried Dual Link Aggregation/Bonded network interfaces. No change.

- Increased MTU to 6000 and 9000. No change.

- Checked all cables. All fine at least Cat5e, in good condition.
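To compare the iperf3 figure with the file copy rates quoted in MB/s, the unit conversion can be sketched as below. mbit_to_mbyte is a hypothetical helper and the only assumption is 8 bits per byte with decimal megabits and megabytes.

```shell
# mbit_to_mbyte RATE: convert megabits/second to megabytes/second (8 bits per byte).
mbit_to_mbyte() {
    awk -v r="$1" 'BEGIN { printf "%.2f\n", r / 8 }'
}

mbit_to_mbyte 926   # the iperf3 result from the network test
```

That puts the measured TCP ceiling at 115.75 MB/s, so the 100 MB/sec from the Windows laptop is about 86% of it and the 60 MB/sec from the Macs about 52%.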

Disks

- Checked S.M.A.R.T. Looks healthy.

- Tested write speed directly to disk with dd if=/dev/zero of=write.test bs=256M count=4 with various bs and count settings (128/8, 512M/2, 1024/1). Result: around 120 MB/s (depending on block size/count)

SMB/AFP

- Benchmarked SMB2, SMB3 and AFP against each other. About equal. See update below: Used wrong method to rule out the SMB implementation of macOS. SMB on Windows is faster, new SMB settings coming with macOS 10.11 and 10.12 may be the reason.

- Tried to tweak the SMB settings, including the socket options (following this instruction)

- Tried different versions of delayed ack settings and rsync --sockopts=TCP_NODELAY (comments)

No significant change of the write speed. We double checked that the config was really loaded and we were editing the right smb.conf.

System

- Watched CPU and RAM load. Nothing maxes out. CPU around 20%, RAM about 25% during transfer.

- Tested the same NAS with DSM 5.x.x in a nearly out-of-the-box setup. No additional software installed. Note: We have two of these in different locations. They are in sync via Synology's CloudSync. Same result.

- Deactivated everything unnecessary which could draw system resources.

We think this is a rather default setup, no fancy adaptions, clients or network components. According to the metrics Synology publishes the NAS should perform 40 MB/s to 75 MB/s faster. But we just can't find the bottleneck.

Clients/NAS

The Mac clients are a MacPro 5,1 (standard wired NIC, running 10.12.3 (16D32)) and a MacBookPro10,1 (Thunderbolt network adapter, running 10.11.6) only about 2m cable away from the NAS, running over the same gigabit switch as the Windows laptop in the test.

We have two of these NASes in different locations and the results are identical. The second NAS is more or less factory default (not even 3rd party software installed). Just two RAID1, EXT4 formatted disks syncing to the other NAS via Synology CloudSync. We've gone as far as directly connecting to the NAS without the switch, same result.

Important Update

The method used to rule out the SMB implementation of macOS/OS X was wrong. I tested it via a virtual machine, assuming it would use its own version of SMB, but obviously the traffic gets handed to macOS, running through its version of SMB.

Using a Windows laptop I've now been able to achieve an average of 100 MB/s, indicating that the SMB implementation/updates coming with 10.11 and 10.12 may cause the poor performance, even if signing_required is set to no.

Would be great if someone could point out some further settings that may have changed with the updates and could affect the performance.

Update 2 – new insights

AndrewHenle pointed out in the comments that I should investigate the traffic in detail using Wireshark for more insight.

I therefore ran sudo tcpdump -i eth0 -s 65535 -w tcpdump.dump on the NAS while transferring two test files, one with 512 MB and one with 1 GB, and inspected the dump with Wireshark.

What I found:

- Both OS X and Windows seem to use SMB2 although SMB3 is enabled on the NAS (at least according to Wireshark).

- OS X seems to stick with the MTU. The packets have 1514 bytes leading to way more network overhead and sent packets (visible in the dumps).

- Windows seems to send packets up to 26334 bytes (if I read the data correctly! Please verify.) even if the MTU shouldn't allow that, since it's set to 1500 on the NAS, the maximum setting would be 9000 there (Synology also uses the 1500 setting in their tests).

- Trying to force macOS to use SMB3 by adding smb_neg=smb3_only to the /etc/nsmb.conf didn't work or at least didn't lead to faster transfers.

- Running rsync --sockopts=TCP_NODELAY with various combinations of TCP delayed ack settings (0 to 3) had no effect (Note: I ran the tcpdump with the default ack setting of 3).
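To put the two packet sizes side by side, here is a rough count of the captured packets needed for the 1024 MB test file. It is only a sketch: packets_needed is a hypothetical helper, and it assumes 54 bytes of headers per captured packet (14 Ethernet + 20 IPv4 + 20 TCP, no TCP options), which the actual dumps may not match exactly.

```shell
# Assumed header bytes per captured packet: Ethernet 14 + IPv4 20 + TCP 20.
HEADER_BYTES=54

# packets_needed FILE_BYTES PACKET_BYTES: packets required if every packet
# has the given captured size (rounded up).
packets_needed() {
    payload=$(( $2 - HEADER_BYTES ))
    echo $(( ($1 + payload - 1) / payload ))
}

FILE_BYTES=$(( 1024 * 1024 * 1024 ))
packets_needed "$FILE_BYTES" 1514    # size seen from OS X
packets_needed "$FILE_BYTES" 26334   # largest size seen from Windows
```

Under the same assumption a 26334 byte packet carries 26280 payload bytes, which is exactly 18 times the 1460 bytes of a 1514 byte packet. That may hint that the capture shows several standard-sized segments merged by network offloading rather than an ignored MTU, but this reading is a guess and not something the dumps confirm.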

I've created 4 dumps as .csv files, 2 while copying 512 MB (test-2.file) and 2 while copying 1024 MB (test.file). You can download the Wireshark exports here (25.2 MB). They are zipped to save space and named self-explanatorily.

Update 3 – smbutil output

Output of smbutil statshares -a as requested by harrymc in the comments.

Note on this: I'm sure SIGNING_SUPPORTED being true here doesn't mean the setting in the config doesn't work, only that signing is supported by the NAS. I've triple checked that changing the signing_required setting in my config has an effect on the write speed (~20 MB/s when turned on, ~60 MB/s when off).

Update 4 – Samba Wars: A New Hope

It feels somewhat embarrassing, but the main problem here – again – seems to be the measurement.

Turns out rsync --progress -a costs about 30 MB/s of writing speed. Writing with dd directly to the SMB share and using time cp /local/test.file /NAS/test.file are faster at about 85-90 MB/s and apparently the fastest way to copy is the macOS Finder at around 100 MB/s (which is also the method hardest to measure, since there is no timing or speed indicator – who needs that, right? o_O). We measured it by first copying a 1 GB file and then a 10 GB file, using a stopwatch.
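The stopwatch arithmetic and the timed cp can be sketched as two small shell helpers. Both names are hypothetical, 1 MB is taken as 1,000,000 bytes, and the timer only has whole-second resolution, so the numbers are rough and include whatever write caching the OS does.

```shell
# rate_mbps BYTES SECONDS: average rate in MB/s (1 MB = 1,000,000 bytes).
rate_mbps() {
    awk -v b="$1" -v s="$2" 'BEGIN { printf "%.1f\n", b / 1000000 / s }'
}

# timed_copy SRC DST: copy with cp and print the average rate.
# Uses whole seconds from date, so pick a file large enough to take several
# seconds; runs shorter than one second are counted as 1 s.
timed_copy() {
    bytes=$(( $(wc -c < "$1") ))
    start=$(date +%s)
    cp "$1" "$2" || return 1
    end=$(date +%s)
    elapsed=$(( end - start ))
    [ "$elapsed" -ge 1 ] || elapsed=1
    echo "$(rate_mbps "$bytes" "$elapsed") MB/s"
}

# Example with the paths from the question:
#   timed_copy /local/test.file /NAS/test.file
```

For a Finder copy timed by hand, rate_mbps 10000000000 100 gives 100.0, meaning a 10 GB file that takes 100 seconds moved at 100 MB/s.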

What we've tried since the last update of this question.

- Copy from Mac client to Mac client. Both have SSDs (MacPro writes with 250 MB/s to own disc, MacBook Pro with 300 MB/s). Result: A meagre 65 MB/s via dd writing from MacBook Pro to MacPro (rsync 25 MB/s). Seeing the 25 MB/s was the moment when we started questioning rsync. Still 65 MB/s are extremely slow. So the SMB implementation on macOS seems… well, questionable.

- Tried different ack settings with dd and cp – no luck.

- Finally we found a way to list all the available nsmb.conf options. It is a simple man nsmb.conf. Caution: the online version is outdated!

So we tried a few more settings, among them:

Note: smb_neg=smb3_only is – as I already expected – not a valid setting. protocol_vers_map=4 should be the valid equivalent.

Anyway, none of these settings made a difference for us.
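For reference, the two settings named in this question could be collected in one file like this. It is only a sketch: it writes to a scratch path (a hypothetical placeholder) instead of the live /etc/nsmb.conf, and it assumes both options belong in the [default] section.

```shell
# Write a candidate nsmb.conf to a scratch path for review.
# CANDIDATE is a hypothetical placeholder; the live file is /etc/nsmb.conf.
CANDIDATE=${CANDIDATE:-${TMPDIR:-/tmp}/nsmb.conf.candidate}

# signing_required=no  : the fix that raised writes from ~20 to ~60 MB/s
# protocol_vers_map=4  : the setting named above as the SMB3-only equivalent
cat > "$CANDIDATE" <<'EOF'
[default]
signing_required=no
protocol_vers_map=4
EOF

cat "$CANDIDATE"
```

Check man nsmb.conf on the client itself before copying anything over, since the available options differ between macOS versions.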

New questions at a glance

Why is rsync such an expensive way to copy one (1!) file? There isn't much to synchronize/compare here, is there? The tcpdump doesn't indicate possible overhead either.

Why are dd and cp slower than the macOS Finder when transferring to a SMB share? It seems when copying with Finder there are significantly fewer acknowledgements in the TCP communication. (Again: The ack setting e.g. delayed_ack=1 made no difference for us.)

Why does Windows seem to ignore the MTU, sending significantly larger and therefore fewer TCP packets, resulting in the best performance, compared to everything possible via macOS?

This is what the packets look like from macOS (constantly 1514)

And this coming from Windows (up to 26334, varying in size)

You can download full .csv here (25.2 MB), the file names explain what has been copied (OS, transfer method and file size).

Spiff

2 Answers

- A similar question has interesting answers; maybe check this thread, especially comment 5: https://bugzilla.samba.org/show_bug.cgi?id=8512#c5

Here I quote 'Peter van Hooft', although I'm not sure which Linux distribution and which version of rsync his test was based on. However: 1. he gives us the idea to try the --sparse flag to see whether it increases performance. 2. he tested over NFS and met the same speed issue, so the protocol (SMB2/3, AFP, etc.) may not be the reason.

We use rsync to copy data from one file server to another using NFS3 mounts over a 10Gb link. We found that upping the buffer sizes (as a quick test) increases performance. When using --sparse this increases performance with a factor of fifty, from 2MBps to 100MBps.

- Here is another interesting inspection of rsync performance: https://lwn.net/Articles/400489/

LWN.net concludes that the performance issue may be related to the kernel. The article was posted in 2010, and we can't change the kernel on the NAS or macOS. However, the article gives us the idea that the kernel issue may be caused by checksum calculation (my guess).

One thing is clear: I should upgrade the kernel on my Mythtv system. In general, the 2.6.34 and 2.6.35-rc3 kernels give better performance than the old 2.6.27 kernel. But, tinkering or not, rsync can still not beat a simple cp that copies at over 100MiB/s. Indeed, rsync really needs a lot of CPU power for simple local copies. At the highest frequency, cp only needed 0.34+20.95 seconds CPU time, compared with rsync's 70+55 seconds.

The comments there also include this:

Note that rsync always verifies that each transferred file was correctly reconstructed on the receiving side by checking a whole-file checksum that is generated as the file is transferred

update 1: my mistake, this description belongs to the --checksum option. Check it here: [Improved the description of the --checksum option.] PS: I don't have enough reputation to post more than 2 links.

But I can't find the same description in the rsync man page, so I am guessing someone was talking about the passage below:

Rsync finds files that need to be transferred using a 'quick check' algorithm (by default) that looks for files that have changed in size or in last-modified time. Any changes in the other preserved attributes (as requested by options) are made on the destination file directly when the quick check indicates that the file's data does not need to be updated.

Therefore, compared to cp/tar/cat, using rsync to copy a bunch of small or big files could cause a performance issue. BUT since I'm not able to read the source code of rsync, I can't confirm this is the ultimate reason.
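The 'quick check' described in the quoted man page text can be illustrated with a small shell function. This is a sketch of the idea only, not rsync's actual code: needs_transfer is a hypothetical name, and the -nt/-ot file tests it relies on are common shell extensions rather than strict POSIX.

```shell
# needs_transfer SRC DST: succeed if a size/mtime quick check would select
# SRC for copying. Illustration of the idea only, not rsync's implementation.
needs_transfer() {
    # A missing destination always needs a transfer.
    [ -e "$2" ] || return 0
    src_size=$(( $(wc -c < "$1") ))
    dst_size=$(( $(wc -c < "$2") ))
    # Different size: transfer.
    [ "$src_size" -ne "$dst_size" ] && return 0
    # Same size but different modification time: transfer.
    if [ "$1" -nt "$2" ] || [ "$1" -ot "$2" ]; then
        return 0
    fi
    return 1
}

# Example: needs_transfer /localpath/test.file /nas/test.file && echo "would copy"
```

Note that this check involves no checksums at all, so for a single new file it cannot by itself account for a large slowdown.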

My idea is to keep checking:

- What rsync version is awenro using for testing? Could you update to the latest version?

- Let's see what the output is when using --stats and -v with --debug=FLAGS

flags

--stats This tells rsync to print a verbose set of statistics on the file transfer, allowing you to tell how effective the rsync algorithm is for your data.

--debug=FLAGS This option lets you have fine-grained control over the debug output you want to see. An individual flag name may be followed by a level number, with 0 meaning to silence that output, 1 being the default output level, and higher numbers increasing the output of that flag (for those that support higher levels). Use --debug=help to see all the available flag names, what they output, and what flag names are added for each increase in the verbose level.
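A hedged sketch of how the two checks above could be combined: look at the installed rsync version first, then run the copy with --stats and add --debug only where it is supported. The helper names and the RSYNC_DEBUG variable are hypothetical, and the 3.1 cut-off for --debug reflects my understanding of the rsync release notes, so verify it against your own rsync --help output.

```shell
# rsync_has_debug VERSION: succeed if VERSION (e.g. 3.1.2 or v3.2.7) is 3.1
# or newer, which is assumed here to be where --debug=FLAGS became available.
rsync_has_debug() {
    v=${1#v}
    major=${v%%.*}
    rest=${v#*.}
    minor=${rest%%.*}
    { [ "$major" -gt 3 ] || { [ "$major" -eq 3 ] && [ "$minor" -ge 1 ]; }; } 2>/dev/null
}

# copy_with_stats SRC DST: copy with -v and --stats; add --debug only when
# RSYNC_DEBUG is set and the installed rsync looks new enough.
# Pick the flag names for RSYNC_DEBUG from the output of: rsync --debug=help
copy_with_stats() {
    version=$(rsync --version | awk 'NR == 1 { print $3 }')
    if [ -n "$RSYNC_DEBUG" ] && rsync_has_debug "$version"; then
        rsync -a -v --stats --debug="$RSYNC_DEBUG" "$1" "$2"
    else
        rsync -a -v --stats "$1" "$2"
    fi
}

# Example: copy_with_stats /localpath/test.file /nas/test.file
```

The version parsing assumes the first line of rsync --version has the version number as its third word, which may not hold for every build.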

Lastly, I would recommend reading this supplemental post for more background.

'How to transfer large amounts of data via network' moo.nac.uci.edu/~hjm/HOWTO_move_data.html

Rsync over ssh encrypts the connection; SMB does not, if I remember correctly. If it's just one file then one system might be able to read that file and the other not. Also note that to send frames larger than 1514 bytes you need to enable 'giants'/'Jumbo Frames'; the fact that packets need to be cut down further may imply that there is overhead to 'repack' them. The second thing to note is that 'giants'/'Jumbo Frames' need to be enabled on both ends AND EVERYTHING BETWEEN.

1514 bytes is the normal Ethernet frame size. 6k-9k frames are called giants or 'Jumbo Frames' depending on the OS/application.

I average 80MB/s between my NAS (a PC with VMs, one of which is the NAS) and my station (a PC) with sftp (using sshfs) [giants not enabled], and the device in between is a MikroTik 2011 (going through the switch chip only).

Remember that MTU is negotiated between two points, that along a path you may have several MTUs of different sizes, and that the effective MTU will be the lowest one available.
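How much jumbo frames can help at best can be estimated with a little arithmetic. The sketch below uses a hypothetical helper, wire_efficiency, and assumes plain IPv4 and TCP headers without options plus standard Ethernet framing; real traffic with TCP options will come out slightly lower.

```shell
# wire_efficiency MTU: percentage of on-wire bytes that carry TCP payload.
# Assumes 40 bytes of IPv4 + TCP headers inside the MTU and 38 bytes of
# Ethernet framing outside it (preamble 8, header 14, FCS 4, inter-frame gap 12).
wire_efficiency() {
    awk -v mtu="$1" 'BEGIN { printf "%.2f\n", (mtu - 40) * 100 / (mtu + 38) }'
}

wire_efficiency 1500   # standard frames
wire_efficiency 9000   # jumbo frames
```

Under these assumptions standard frames already reach 94.93% and jumbo frames 99.14%, so raising the MTU can gain only about four percentage points of raw throughput.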

edit: SMB is not very efficient for file transfers.

Run5k